The Rise of ChatGPT & GenAI and What It Means for Cybersecurity

ChatGPT and Generative AI (GenAI) have taken the world by storm, and their implications reach deep into cybersecurity and SecOps. The main reason is that cybercriminals now use GenAI to make their attacks on organizations more potent and more frequent. To cope, security teams need to adapt, and many are looking for ways to use AI to counter these attacks in kind. Given how often attacks now arrive, security teams also need to continuously manage their organization's externally visible attack surface and automate wherever possible. That's what we discuss in this article: examples of how ChatGPT and GenAI are being used in cyberwarfare, and how security teams can keep pace with the changing times.


The rise of GenAI & ChatGPT in cybercrime

There are many examples of organizations looking for ways to leverage ChatGPT to write code for basic applications, reduce the amount of boilerplate code developers need to write, or build an MVP with little to no effort. This is the good side of GenAI.

However, cybercriminals are usually the first to take notice of such advances and are quick to find ways to put GenAI to use in their operations as well. Here are some examples of the bad side:

Building a zero-day virus using ChatGPT prompts

With a bit of creativity, it's easy to bypass ChatGPT's default guardrails and turn it into a handy helper for creating a zero-day virus.

ChatGPT + developer mode = danger

Japanese analysts used ChatGPT in developer mode to create a virus in minutes, highlighting its potential for misuse.

ChatGPT used to write malicious code

As a glimmer of hope, some researchers have found that ChatGPT's coding skills are still rudimentary and that it takes a seasoned cybercriminal to put it to effective use. That may not be very reassuring, but the key point is that ChatGPT lowers the barrier to entry for cybercrime and can be badly misused in the wrong hands.

These examples are enough to make us sit up and take notice of what's at stake with ChatGPT and GenAI, as cybersecurity incidents involving AI and machine learning are on the rise.

Incidents of AI risks in cybersecurity

There have been innumerable reports on ChatGPT and GenAI being used in cybercrime. What kinds of attacks are commonly seen?

- Brute force attacks

AI is being used to crack passwords through multiple methods, including brute force. The shorter the password, the easier it is to crack: a 6-digit password can be cracked in seconds. Alongside brute force, attackers can use other password-cracking techniques, such as dictionary attacks and acoustic side-channel attacks (which infer keystrokes from the sound of typing), to wreak even more havoc.
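To see why length matters so much, here's a back-of-the-envelope sketch of exhaustive (brute-force) search: the keyspace is the character-set size raised to the password length, and the worst-case time is that keyspace divided by the guess rate. The rate of 10 billion guesses per second is an assumption standing in for an offline attack on a fast hash; online logins with rate limiting are far slower. This part is plain arithmetic rather than AI; AI-assisted guessers aim to shrink the effective search by trying likely passwords first.

```python
def keyspace(charset_size: int, length: int) -> int:
    """Number of candidate passwords an exhaustive search must cover."""
    return charset_size ** length


def worst_case_seconds(charset_size: int, length: int, guesses_per_second: float) -> float:
    """Time to try every candidate at the given guess rate."""
    return keyspace(charset_size, length) / guesses_per_second


# Assumption: 10 billion guesses per second (offline attack on a fast hash)
RATE = 1e10

for label, charset, length in [
    ("6 digits", 10, 6),
    ("8 lowercase letters", 26, 8),
    ("12 letters and digits", 62, 12),
]:
    secs = worst_case_seconds(charset, length, RATE)
    print(f"{label:24} {keyspace(charset, length):>26,} candidates  {secs:.3g} s")
```

Under that assumed rate, the 6-digit case finishes in a fraction of a millisecond, 8 lowercase letters take roughly 20 seconds, and 12 characters from a 62-symbol set would take on the order of ten thousand years.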

- Building spyware and malware at scale

The FBI warns that AI is already being used to carry out malware attacks, and that criminals are using it in multiple ways: to write code, create malware, and produce deepfakes at scale. Similarly, Britain's spy agency has called out AI's use "for advanced malware generation."

Meta, Facebook's parent company, reports that it found 6 spyware networks tied to 8 companies around the world. Another report found that Android spyware rose sharply in 2023. These are not isolated incidents but a clear warning that ChatGPT and GenAI are a real threat when it comes to spyware and malware.

- Deepfake & impersonation

A deepfake is a synthetic or digitally altered video (or audio recording) of a real person. Scammers use deepfakes to trick viewers into taking some action, such as sharing passwords or IDs, or transferring money to an account. In one major case of such fraud, a deepfake video of a CFO was used to extract $25M from an organization. Similarly, bots were used to impersonate profiles on X to promote fake cryptocurrencies and even gain access to users' crypto wallets. Deepfake videos have been used for election manipulation in India and pose a clear threat to the upcoming 2024 US elections as well.

- Credential cracking

As mentioned earlier, AI can crack weak passwords in seconds. But AI's role in credential theft goes beyond brute force. AI is being used to create scam websites at scale: ChatGPT can generate the text, and DALL-E, also from OpenAI, can generate the images and artwork, making these websites easy to build. WhatsApp, the widely used messaging app, hasn't been spared either, with reports of fake WhatsApp login websites surfacing. And then there's the ol' email phishing menace, which shouldn't be forgotten. It's one of the top cyber risk trends we've highlighted in the past.

How AI empowers cybercriminals

The real-world examples above show how ChatGPT and GenAI are used by cybercriminals. But here’s what we can observe from all these reports:

Attackers are using AI to be smarter and faster

Cybercriminals have limited resources and are always on the lookout for new technologies and advancements that can give them an edge. ChatGPT and GenAI are the perfect tools to help them get more done in less time. Whether it's writing code, creating websites, producing deepfake videos, or building malware, attackers are much more capable when equipped with GenAI.

Even novices can leverage AI to automate attacks

ChatGPT and GenAI give anyone the opportunity to become a hacker, regardless of skill level. While this is empowering when used for good, it's dangerous when motives are suspect. Because of this lower barrier to entry, the number of cybercriminals worldwide is likely to spike sharply, and the number of cyberattacks along with it.

AI empowers traditional attacks & introduces new ones

Brute force attacks, malware, spyware, impersonation, and login spoofing are all age-old ways to steal from, blackmail, and extort money from organizations and people. However, AI multiplies the impact of these attacks, making them faster, more powerful, and harder to detect and fight. What's more, AI is enabling new types of attacks, such as deepfakes.

We’ve described the challenge in great detail so far. It’s now time to transition to talking about the solution – and yes, there is hope despite the bad news.

AI cybersecurity threats call for proactive, not reactive cybersecurity

Reactive, detect-and-respond solutions struggle to keep up with the pace, scale, and sophistication of these AI threats. This makes proactive cybersecurity, and especially threat exposure management, more important than ever. Being proactive means spotting attacks before or as soon as they start, and responding to them in real time to minimize their impact. It also means improving your organization's security posture with AI-driven threats in mind.

Using AI to fight AI threats

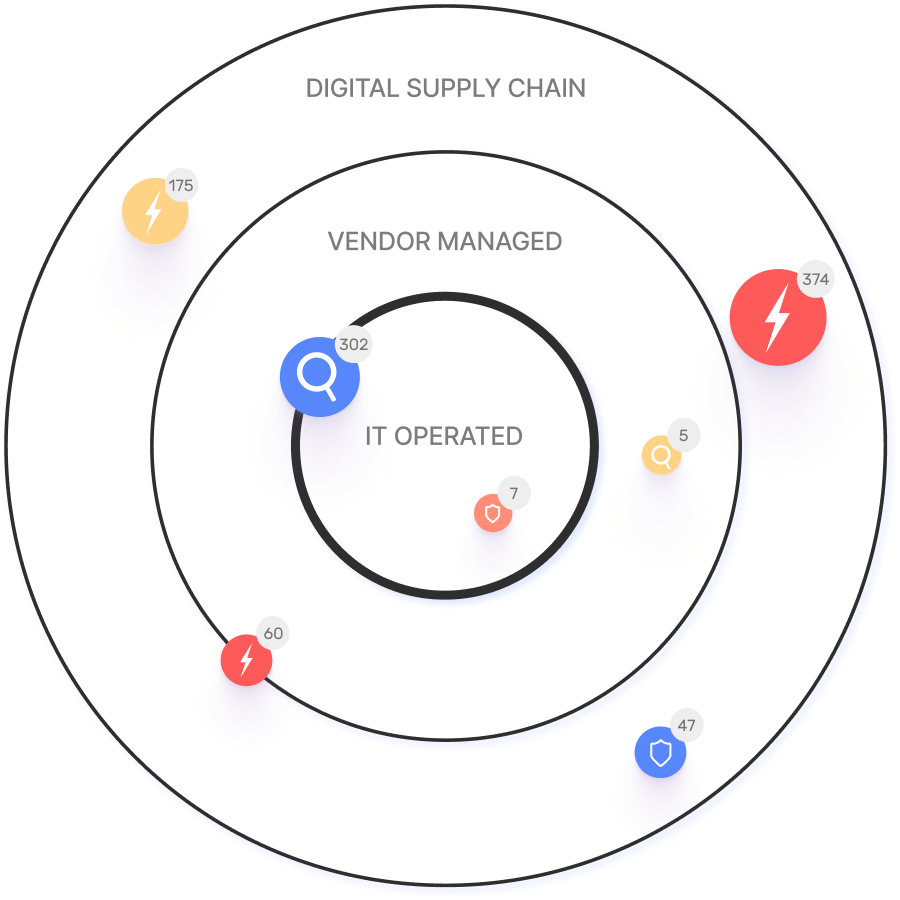

As the old saying goes, “it takes one to know one” – to fight AI-backed hackers, you need to think and act like one. You need to view your organization’s digital supply chain from the outside-in, the way they see it. Here are four ways to do this:

- Discovering the real attack surface

AI can help you gain a more detailed and accurate view of your organization's real attack surface: everything about your organization that is externally visible. AI can weigh a variety of factors to identify, with a high level of confidence, the legitimate assets that belong to your organization. It can assess how relevant assets are and uncover risky connections between them that humans would miss, and it can do this much faster than traditional cybersecurity software.
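The article doesn't spell out how discovery works internally. A common building block in outside-in discovery is pivoting: start from assets you know you own and follow shared attributes (a TLS certificate, an IP address, a WHOIS registrant) to find related ones, keeping the chain of evidence for each hop. The sketch below runs a breadth-first pivot over hypothetical, hard-coded observations; the asset names and link reasons are made up for illustration, and a real pipeline would gather them from sources such as DNS and certificate transparency logs and then score each link (which is where ML comes in) rather than trust it blindly.

```python
from collections import deque

# Hypothetical observations from an outside-in scan: (asset, related asset, why)
OBSERVED_LINKS = [
    ("example.com", "shop.example.com", "same TLS certificate"),
    ("shop.example.com", "203.0.113.10", "DNS A record"),
    ("203.0.113.10", "legacy-portal.example.net", "same IP address"),
    ("example.com", "cdn.example.org", "same WHOIS registrant"),
    ("unrelated.example", "203.0.113.99", "DNS A record"),
]


def discover(seeds, links, max_hops=3):
    """Breadth-first pivot from known assets, keeping the evidence for each hop."""
    graph = {}
    for a, b, reason in links:
        graph.setdefault(a, []).append((b, reason))
        graph.setdefault(b, []).append((a, reason))

    evidence = {seed: [] for seed in seeds}  # asset -> chain of links that reached it
    queue = deque((seed, 0) for seed in seeds)
    while queue:
        asset, hops = queue.popleft()
        if hops == max_hops:
            continue  # don't pivot any further from this asset
        for neighbor, reason in graph.get(asset, []):
            if neighbor not in evidence:
                evidence[neighbor] = evidence[asset] + [f"{asset} -> {neighbor} ({reason})"]
                queue.append((neighbor, hops + 1))
    return evidence


for asset, chain in discover(["example.com"], OBSERVED_LINKS).items():
    print(asset, "|", "; ".join(chain) or "seed")
```

Limiting `max_hops` matters: each hop adds uncertainty, and a shared IP on a hosting provider or CDN is weak evidence that two assets have the same owner.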

- Detecting anomalies in real-time

AI is great at pattern recognition, even for patterns that simple rules-based tools can't spot, because it can find connections between data points that go beyond fixed rules. This can be used to detect suspicious network activity, for example.
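A learned model is beyond a short example, but the core idea of judging activity against a baseline instead of a fixed rule can be sketched with a rolling z-score. This is a simple statistical stand-in, not an ML model; the window size, the threshold of 3 standard deviations, and the failed-login counts are all illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_anomalies(values, window=10, threshold=3.0):
    """Yield (index, value, z) for points far outside the recent baseline."""
    history = deque(maxlen=window)
    for i, value in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history) or 1e-9  # avoid dividing by zero on flat data
            z = (value - mu) / sigma
            if abs(z) >= threshold:
                yield i, value, z
        history.append(value)


# Hypothetical failed-login counts per minute for one internet-facing service
failed_logins = [4, 5, 3, 6, 4, 5, 4, 6, 5, 4, 5, 48, 6, 5]
for minute, count, z in rolling_anomalies(failed_logins):
    print(f"minute {minute}: {count} failed logins (z = {z:.1f})")
```

Only the spike at minute 11 is reported, even though a fixed rule such as "alert above 50 failed logins" would have missed it. Note that the spike then inflates the baseline for the following minutes; one common refinement is to leave flagged points out of the baseline.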

Threat exposure management relies on millions of data points. AI can help scan, filter, sort, categorize, and prioritize cybersecurity data, including logs, metrics, and alerts. It can also let you use policies to layer many rules on top of one another for a robust threat detection strategy.
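Here's one way to picture layering rules through policies: treat each rule as a small predicate and combine them with all/any operators, so a single policy can mix fixed conditions with a model's output. The alert fields, the 1-to-5 severity scale, and the 0.8 anomaly-score cutoff are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Alert:
    asset: str
    severity: int          # hypothetical scale: 1 (low) to 5 (critical)
    internet_facing: bool
    anomaly_score: float   # e.g. output of an anomaly model, 0.0 to 1.0


Rule = Callable[[Alert], bool]


def all_of(*rules: Rule) -> Rule:
    """Policy that matches only if every rule matches."""
    return lambda alert: all(rule(alert) for rule in rules)


def any_of(*rules: Rule) -> Rule:
    """Policy that matches if at least one rule matches."""
    return lambda alert: any(rule(alert) for rule in rules)


def high_severity(alert: Alert) -> bool:
    return alert.severity >= 4


def exposed(alert: Alert) -> bool:
    return alert.internet_facing


def model_flagged(alert: Alert) -> bool:
    return alert.anomaly_score >= 0.8  # illustrative cutoff


# Layered policy: escalate internet-facing assets that are either
# high severity or strongly flagged by the anomaly model.
escalate = all_of(exposed, any_of(high_severity, model_flagged))

alerts = [
    Alert("shop.example.com", 2, True, 0.91),
    Alert("build-server-07", 5, False, 0.40),
    Alert("legacy-portal.example.net", 4, True, 0.10),
]
for alert in alerts:
    print(f"{alert.asset}: {'escalate' if escalate(alert) else 'log only'}")
```

Because policies are just composed functions, new rules can be stacked on without rewriting the existing ones.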

- Automating and scaling remediation

AI can help you make informed decisions about how to respond to a threat: prioritizing alerts, suppressing false positives, highlighting high-priority threats, and suggesting a response workflow. Beyond that, AI can be used for attack surface management automation, responding to and remediating threats without human intervention. It's still early days for auto-remediation, but the potential is immense.
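A minimal sketch of the prioritization step, assuming each alert carries severity, exposure, and confidence values between 0 and 1 (hypothetical fields, as are the playbooks): known false positives are suppressed by fingerprint, the rest are ranked by the product of the three values, and each surviving alert gets a suggested workflow. The multiplicative score is one simple choice, not a description of any particular product's method, and the code only suggests steps; it doesn't execute them.

```python
# Hypothetical playbooks keyed by alert category
PLAYBOOKS = {
    "exposed_admin_panel": ["confirm ownership", "restrict access", "enforce MFA"],
    "expired_certificate": ["identify owner", "renew certificate", "verify deployment"],
}
DEFAULT_PLAYBOOK = ["triage manually"]


def triage(alerts, suppressed, min_priority=0.3):
    """Suppress known false positives, rank the rest, and suggest a workflow."""
    queue = []
    for alert in alerts:
        if alert["fingerprint"] in suppressed:
            continue  # an analyst already marked this one as a false positive
        priority = alert["severity"] * alert["exposure"] * alert["confidence"]
        if priority < min_priority:
            continue  # below the cutoff: not surfaced
        steps = PLAYBOOKS.get(alert["category"], DEFAULT_PLAYBOOK)
        queue.append((round(priority, 2), alert["asset"], steps))
    return sorted(queue, key=lambda item: item[0], reverse=True)


alerts = [
    {"asset": "admin.example.com", "category": "exposed_admin_panel",
     "severity": 0.9, "exposure": 1.0, "confidence": 0.8, "fingerprint": "a1"},
    {"asset": "old.example.com", "category": "expired_certificate",
     "severity": 0.5, "exposure": 1.0, "confidence": 0.9, "fingerprint": "b2"},
    {"asset": "test.example.com", "category": "exposed_admin_panel",
     "severity": 0.9, "exposure": 1.0, "confidence": 0.9, "fingerprint": "c3"},
    {"asset": "wiki.internal", "category": "unknown_service",
     "severity": 0.6, "exposure": 0.2, "confidence": 0.5, "fingerprint": "d4"},
]
for priority, asset, steps in triage(alerts, suppressed={"c3"}):
    print(priority, asset, "->", ", ".join(steps))
```

With the sample data, the admin panel (priority 0.72) comes first and the expired certificate (0.45) second; the suppressed alert is dropped, and the low-exposure internal service falls below the 0.3 cutoff.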

- Increasing ROI on cybersecurity investments

AI can reduce the cost of cybersecurity by cutting both the human effort and the number of tools required. AI can perform complex tasks repeatably, and unlike humans, who need rest, it can work around the clock. Importantly, AI can also get smarter over time. Together, these factors drive greater efficiency and lower costs in cybersecurity activities, resulting in better ROI on cybersecurity investments.

What are the challenges in adopting AI?

AI is still in its infancy, and there are teething pains to deal with along the way. Here are some of them:

AI is new to everyone

There are things that even OpenAI, the builders of ChatGPT, can't predict about the tool they've created and its capabilities. Given that, it will take years to fully understand AI's potential, let alone use it to that potential. Organizations that are busy building products and getting them to market struggle to find the time to learn and adopt the new technology. It takes focus away from the core business, and many still see it as a thing of the future.

Unknown unknowns are a real thing with GenAI

As former Defense Secretary Donald Rumsfeld famously said, "there are also unknown unknowns – [things] we don't know we don't know." This is doubly true of ChatGPT and GenAI. A fear once confined to sci-fi books, of machines acting on their own and introducing risks they weren't designed to create, may now be coming true. OpenAI is still grappling with how to ensure its creation doesn't break laws or cause privacy issues.

Can AI replace a human in cybersecurity risk prevention?

The short answer is 'No'. Most cybersecurity systems that use AI are still "human in the loop" use cases. AI is powerful and has a lot of potential, but it has a long way to go before it has a human's situational awareness. Let's look at why.

Risks of relying on AI

Lack of transparency

Due to concerns about AI's lack of transparency, a study found that 41% of executives had banned the use of AI in their organizations. Clearly, AI needs direction and oversight, and some organizations are not yet willing to take on the risks that come with it. For example, machine learning algorithms that discover assets and assess their cyber-risk are extremely useful for speeding up discovery. But customers of cybersecurity products want to understand how assets are attributed to them, and do not want to simply rely on "this is likely to be your risky asset because the model says it is." Transparency will be key to AI adoption by cybersecurity teams.
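One way to avoid "the model says so" is to use a scoring model whose output decomposes into per-signal contributions. In the sketch below, a logistic score over a few attribution signals returns both a confidence and the ranked reasons behind it. The signal names, weights, and bias are hypothetical, not taken from any real model; for non-linear models, attribution methods such as SHAP serve a similar purpose.

```python
import math

# Hypothetical weights a model might have learned for "this asset belongs to us"
WEIGHTS = {
    "whois_org_matches": 2.0,
    "shares_tls_cert_with_known_asset": 1.5,
    "resolves_to_owned_ip_range": 1.2,
    "shared_hosting_ip": -0.8,  # shared hosting weakens the ownership case
}
BIAS = -2.0


def explain(signals):
    """Return attribution confidence plus each signal's contribution, largest first."""
    contributions = {name: WEIGHTS[name] * value for name, value in signals.items()}
    logit = BIAS + sum(contributions.values())
    confidence = 1 / (1 + math.exp(-logit))  # logistic function
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return confidence, ranked


confidence, reasons = explain({
    "whois_org_matches": 1,
    "shares_tls_cert_with_known_asset": 1,
    "resolves_to_owned_ip_range": 0,
    "shared_hosting_ip": 1,
})
print(f"confidence {confidence:.2f}")
for name, contribution in reasons:
    print(f"  {name:34} {contribution:+.1f}")
```

For the sample asset, the matching WHOIS organization and shared certificate push the confidence up, the shared hosting IP pulls it down, and the result (about 0.67) comes with that explanation attached instead of a bare number.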

Creating too many false-positives

AI models used in cybersecurity can be so sensitive when finding and analyzing cyber data that they create false positives: cases where the model flags something as risky, but the risk either no longer exists or isn't significant enough to matter. We need to get AI to the point where training the models with human input does a better job of reducing false positives.
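One simple way human input can cut false positives, offered as an illustration rather than a description of any particular product: analysts label past alerts as real or not, and the alerting threshold on the model's score is re-tuned so that alerts above it meet a chosen precision. The scores, verdicts, and 0.9 precision target below are hypothetical.

```python
def pick_threshold(feedback, target_precision=0.9):
    """Return the lowest score threshold whose alerts meet the precision target.

    `feedback` is a list of (model_score, analyst_confirmed_real) pairs.
    The lowest qualifying threshold keeps as many real detections as possible.
    """
    for threshold in sorted({score for score, _ in feedback}):
        flagged = [is_real for score, is_real in feedback if score >= threshold]
        precision = sum(flagged) / len(flagged)
        if precision >= target_precision:
            return threshold, precision
    return None, None  # no threshold reaches the target


# Hypothetical analyst verdicts on past alerts
feedback = [
    (0.95, True), (0.91, True), (0.88, True), (0.85, False), (0.80, True),
    (0.72, False), (0.65, False), (0.60, True), (0.40, False), (0.30, False),
]
threshold, precision = pick_threshold(feedback)
print(f"alert at score >= {threshold} (precision on past alerts: {precision:.0%})")
```

With the sample feedback, a 0.9 precision target yields a threshold of 0.88, which also means the real issues scored at 0.80 and 0.60 would no longer raise alerts: the usual trade-off between false positives and missed detections, and a reason to keep a human in the loop.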

Get in touch with us to discuss how you can use the power of AI to improve your security posture, and how IONIX uses machine learning in its discovery and risk assessment processes.